Simplify Reinforcement Learning (RL) processes with a dynamic, web-based platform designed to visualize and optimize decision-making tasks.

What is Reinforcement Learning?

Reinforcement learning (RL) is a branch of machine learning for solving sequential decision-making problems. An RL agent learns through trial and error: it takes actions in an environment, receives rewards as feedback, and adjusts its behavior to maximize cumulative reward over time. This approach enables applications across many industries, including autonomous driving, robotics, healthcare, finance, and gaming.
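To make the trial-and-error loop concrete, here is a minimal sketch of tabular Q-learning. It is one classic RL algorithm, not necessarily one that AutoRL X uses. The `Corridor` environment is a hypothetical five-cell corridor where the agent earns a reward of 1 only when it reaches the rightmost cell. The environment and all hyperparameters are illustrative assumptions.

```python
import random


class Corridor:
    """Hypothetical 1-D environment: cells 0..n-1, episode ends at the rightmost cell."""

    def __init__(self, n_states=5):
        self.n_states = n_states
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n_states - 1)
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0  # feedback only when the goal is reached
        return self.state, reward, done


def q_learning(env, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(env.n_states)]  # q[state][action]
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            # Trial and error: explore with probability epsilon, otherwise
            # exploit the best known action (ties broken at random).
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                best = max(q[state])
                action = rng.choice([a for a in (0, 1) if q[state][a] == best])
            next_state, reward, done = env.step(action)
            # Learn from feedback: nudge Q(s, a) toward the reward plus the
            # discounted value of the best action in the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning(Corridor())
# Greedy action in each non-terminal cell (1 = move right).
policy = [row.index(max(row)) for row in q_table[:-1]]
print(policy)
```

Tabular Q-learning was chosen here only because it fits in a few lines. Deep RL algorithms replace the table with a neural network but keep the same act, observe, and update loop.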

Features

Interactive RL Visualization

AutoRL X provides real-time, web-based visualizations and tools that help users understand RL agent behavior and performance.

Open-Source Expansion

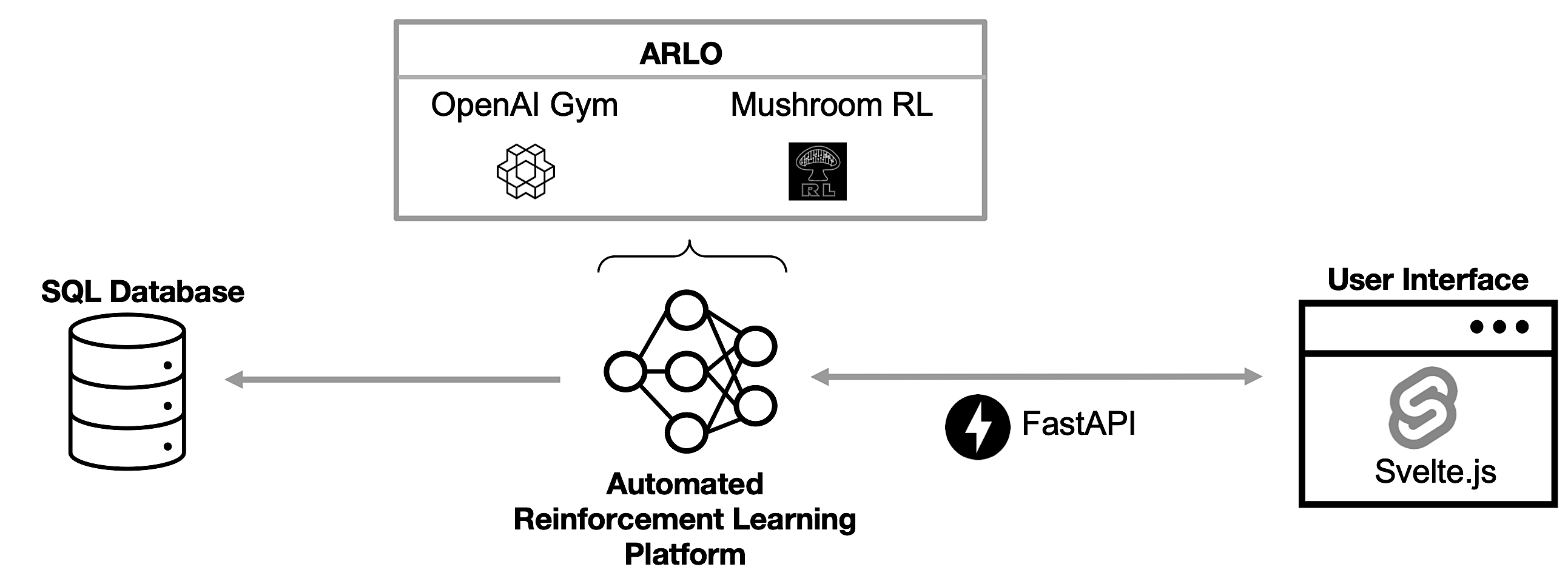

Built on frameworks such as ARLO and Svelte.js, AutoRL X offers a smooth, extensible user experience for researchers and practitioners alike.

Real Applications

Explore real-world applications of RL, such as optimizing decision-making tasks in autonomous driving, robotics, healthcare, production, finance, and gaming.

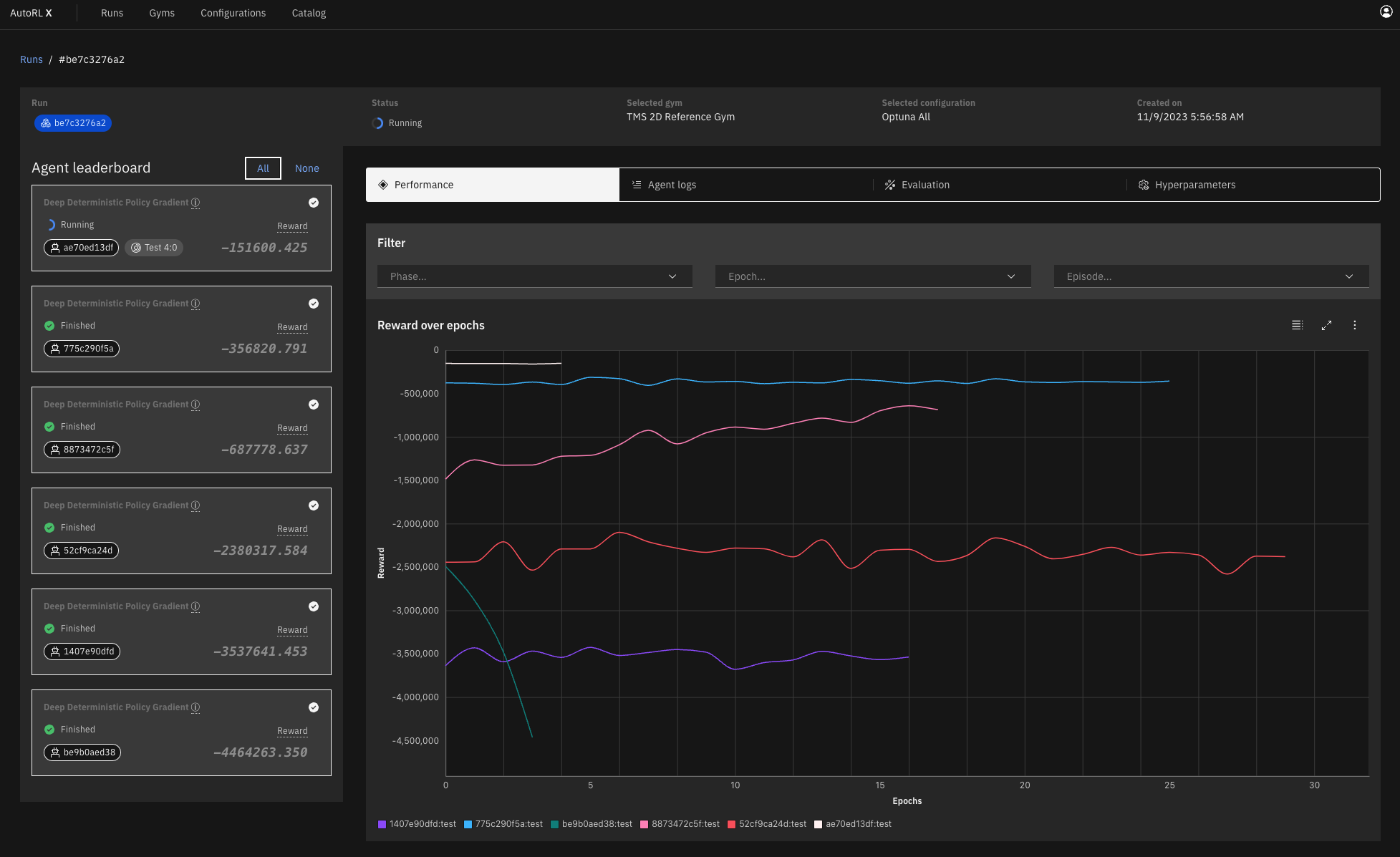

Agent Leaderboard: A Gym environment run with different RL algorithms in AutoRL X's agent leaderboard. Users select agent configurations on the left and add them to the line chart to explore and compare their progress in real time. The menu above the chart lets users filter by phase, epoch, iteration, and action of the RL model or agent, and switch between tabs showing agent logs, visited states, hyperparameters, and learned policies.
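As a rough illustration of what comparing agents' progress involves, the sketch below groups logged episode rewards into one learning curve per agent and ranks agents by their mean reward in the most recent epoch. The record layout (`agent`, `epoch`, `reward` fields), the sample values, and the helper names `learning_curves` and `leaderboard` are hypothetical assumptions, not AutoRL X's actual log format or API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log records: one entry per finished episode.
logs = [
    {"agent": "DQN", "epoch": 1, "reward": 12.0},
    {"agent": "DQN", "epoch": 2, "reward": 30.0},
    {"agent": "DQN", "epoch": 2, "reward": 34.0},
    {"agent": "PPO", "epoch": 1, "reward": 20.0},
    {"agent": "PPO", "epoch": 2, "reward": 45.0},
    {"agent": "A2C", "epoch": 1, "reward": 8.0},
]


def learning_curves(records):
    """Hypothetical helper: agent -> {epoch: mean reward}, i.e. one chart line per agent."""
    grouped = defaultdict(lambda: defaultdict(list))
    for r in records:
        grouped[r["agent"]][r["epoch"]].append(r["reward"])
    return {
        agent: {epoch: mean(rewards) for epoch, rewards in sorted(epochs.items())}
        for agent, epochs in grouped.items()
    }


def leaderboard(curves):
    """Hypothetical helper: rank agents by mean reward in their latest epoch, best first."""
    latest = {agent: curve[max(curve)] for agent, curve in curves.items()}
    return sorted(latest.items(), key=lambda item: item[1], reverse=True)


curves = learning_curves(logs)
for rank, (agent, score) in enumerate(leaderboard(curves), start=1):
    print(rank, agent, score)
```

Ranking by each agent's latest epoch favors agents that have trained longer; comparing all agents at a fixed epoch is a fairer alternative when runs have different lengths.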

3D Visualization in Edit Gym: Users can write TypeScript code to design a 3D environment that visualizes an agent's actions within AutoRL X. This example shows agent trajectories in a simulated Gym environment, making the agent's behavior easier to interpret.

Installation Overview

The quickest way to set up AutoRL X is to deploy it with Docker. Advanced users can instead run it in developer mode for custom configurations. Detailed installation instructions are available in our GitHub repository.

About AutoRL X

AutoRL X is an open-source platform that simplifies reinforcement learning (RL) processes. It provides real-time, web-based visualizations and intuitive interfaces for decision optimization, making RL more accessible across diverse applications. By bridging the gap between complex RL workflows and user-friendly tools, AutoRL X helps practitioners in fields such as healthcare, robotics, production, and autonomous systems apply RL to real-world challenges.

Innovative and Extensible

AutoRL X is built on frameworks such as ARLO and Svelte.js and integrates with widely used RL environment libraries such as OpenAI Gym and its maintained successor, Gymnasium.

For more information, explore our GitHub repository or read our ACM publication.

Need Help?

For support, feel free to reach us at or submit an issue on our GitHub repository. For general inquiries, partnership opportunities, or collaboration requests, contact us at .